Multi-armed bandit vs. A/B testing: Choosing the right experimentation framework

Published on November 05, 2025/Last edited on November 05, 2025/15 min read

Team Braze

Marketers need reliable ways to test ideas and adapt quickly to shifting customer behavior. Choosing between multi-armed bandit vs. A/B testing often comes down to speed, confidence, and the type of decision at hand. A/B testing delivers clear, statistically rigorous results, while multi-armed bandit testing reallocates traffic in real time to maximize performance during the run. Both approaches bring distinct advantages—and tradeoffs.

In this article, we’ll break down the differences between A/B testing and multi-armed bandit testing, highlight when each method makes sense, and explore hybrid models that combine rigor with adaptability. You’ll also see how Braze and BrazeAI Decisioning Studio™ give marketing teams the tools to run structured tests, optimize dynamically, and build toward reinforcement learning for personalization at scale.

Contents

What is A/B testing?

What is multi-armed bandit testing?

Key differences of A/B testing vs bandit testing

When to use multi-armed bandit optimization

Real-life examples for A/B testing and bandit algorithms marketing

Dynamic experimentation: hybrid approaches that scale

How Braze and BrazeAI Decisioning Studio™ enable testing

Final thoughts on multi-armed bandit vs. A/B testing

FAQs on multi-armed bandit vs. A/B testing

What is A/B testing?

A/B testing splits traffic evenly, typically 50/50, between a control and a variant. You pick something to test, for example a header or a button placement, and then choose how you’ll measure success: say CTR, CVR, or revenue per customer. Decide how many people you need in the test (your sample size), then run both versions at the same time. Because traffic stays even, you get a clean read on which one works better, which is why A/B tests are known for statistical rigor and clear decisions. You can also run several variants in the same test, like four different headers against the control.

How A/B testing works

- Set a simple hypothesis and a primary KPI

- Split traffic evenly between A and B, and keep it steady

- Run the test long enough to reach the planned sample size

- Compare results, estimate incremental lift, and roll out the winner
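To make the steps above concrete, here is a minimal Python sketch of the two calculations they imply: estimating the sample size per arm up front, then comparing conversion rates at the end. It assumes a binary conversion KPI and uses a standard two-proportion z-test; the function names and default thresholds (5% significance, 80% power) are illustrative assumptions, not a description of how any particular platform computes results.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(baseline_rate, min_relative_lift, alpha=0.05, power=0.80):
    """Approximate users needed in EACH arm to detect a relative lift in a conversion rate."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (relative lift of B over A, two-sided p-value) using a pooled z-test."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return (rate_b - rate_a) / rate_a, p_value
```

For example, `sample_size_per_arm(0.05, 0.10)` estimates how many users each arm needs to detect a 10% relative lift on a 5% baseline, and `two_proportion_z_test(500, 10_000, 600, 10_000)` returns the observed lift together with a p-value you can compare against the threshold you committed to before launch.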

Good for:

- Big changes you need to validate with confidence

- Brand-sensitive customer journeys where a consistent experience matters

- Marketing experiments where certainty matters more than speed

Heads-up:

- You need enough time and traffic for a reliable read—small samples can mislead

- There’s an opportunity cost while you test—some people will see a weaker option

- Don’t peek or tweak mid-run; stopping early can bias results

- Watch for outside noise (overlapping promos, seasonality) that can tilt the outcome
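The warning about peeking is easier to see with a small simulation. The sketch below runs A/A tests, where both arms share the same true conversion rate, so any "significant" result is a false positive. It compares judging once at the planned sample size with stopping at the first significant interim look. All parameters (number of looks, conversion rate, seed) are illustrative assumptions.

```python
import math
import random
from statistics import NormalDist


def is_significant(conv_a, conv_b, n, alpha=0.05):
    """Pooled two-proportion z-test for two arms with n users each."""
    pooled = (conv_a + conv_b) / (2 * n)
    if pooled in (0.0, 1.0):
        return False  # no variation yet, nothing to test
    std_err = math.sqrt(pooled * (1 - pooled) * 2 / n)
    z = abs(conv_a - conv_b) / n / std_err
    return 2 * (1 - NormalDist().cdf(z)) < alpha


def false_positive_rates(n_tests=300, n_per_arm=2000, looks=10, rate=0.10, seed=7):
    """Simulate A/A tests (no real difference) and compare two stopping rules."""
    rng = random.Random(seed)
    step = n_per_arm // looks
    fixed_hits = peeking_hits = 0
    for _ in range(n_tests):
        conv_a = conv_b = 0
        any_look_significant = False
        for look in range(1, looks + 1):
            conv_a += sum(rng.random() < rate for _ in range(step))
            conv_b += sum(rng.random() < rate for _ in range(step))
            significant = is_significant(conv_a, conv_b, look * step)
            any_look_significant = any_look_significant or significant
        fixed_hits += significant             # judged only at the planned sample size
        peeking_hits += any_look_significant  # would have stopped at some interim look
    return fixed_hits / n_tests, peeking_hits / n_tests
```

Calling `false_positive_rates()` returns the two false-positive rates side by side. Because the final look is also one of the interim looks, the peeking rate can never come out lower than the fixed-horizon rate, and in practice it is usually noticeably higher.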

What is multi-armed bandit testing?

Multi-armed bandit (MAB) testing is adaptive testing. Instead of holding a fixed 50/50 split, a machine learning algorithm moves more traffic to the option that’s winning while the test is running. It balances exploration vs. exploitation: keep trying all options a little, but send more people to what looks best right now.

How multi-armed bandit testing works

- Start by trying all versions

- As results come in, shift more traffic to the leader

- Keep a small amount of traffic on the others so you don’t miss a late winner

- Use sticky bucketing, where you make sure that a returning user keeps seeing the same version
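One common way to implement the first three steps is Thompson sampling, sketched below in Python. This is an illustrative choice, not necessarily the algorithm behind any specific product, and the variant names and conversion rates are hypothetical. Each variant keeps a Beta distribution over its conversion rate; on every send, the bandit draws one sample per variant and serves the highest draw, so leaders get more traffic while uncertain variants still get occasional tries.

```python
import random


class ThompsonBandit:
    """Beta-Bernoulli Thompson sampling over named message variants."""

    def __init__(self, variants, seed=None):
        self.rng = random.Random(seed)
        # Beta(1, 1) prior per variant: [successes + 1, failures + 1]
        self.posterior = {name: [1, 1] for name in variants}

    def choose(self):
        """Sample a plausible conversion rate per variant and serve the highest draw."""
        draws = {
            name: self.rng.betavariate(alpha, beta)
            for name, (alpha, beta) in self.posterior.items()
        }
        return max(draws, key=draws.get)

    def record(self, name, converted):
        """Update the served variant's posterior with the observed outcome."""
        self.posterior[name][0 if converted else 1] += 1


# Simulated campaign with hypothetical true conversion rates.
true_rates = {"subject_a": 0.04, "subject_b": 0.06, "subject_c": 0.12}
bandit = ThompsonBandit(true_rates, seed=42)
world = random.Random(0)
served = {name: 0 for name in true_rates}
for _ in range(5000):
    arm = bandit.choose()
    served[arm] += 1
    bandit.record(arm, world.random() < true_rates[arm])
```

After the loop, `served` shows how many sends each variant received. In a simulation like this one, most traffic tends to end up on the strongest variant, while the weaker ones still collect a small number of sends.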

Good for:

- Time-sensitive optimization like flash sales, holiday promos, or daily send-time windows

- Many-variant tests when you want faster and continuous learning and less wasted traffic

- Situations where short-term outcomes matter more than a perfect ranking of every variant

Heads-up:

- Since traffic shifts, you’ll collect less data on weaker options, which can reduce certainty

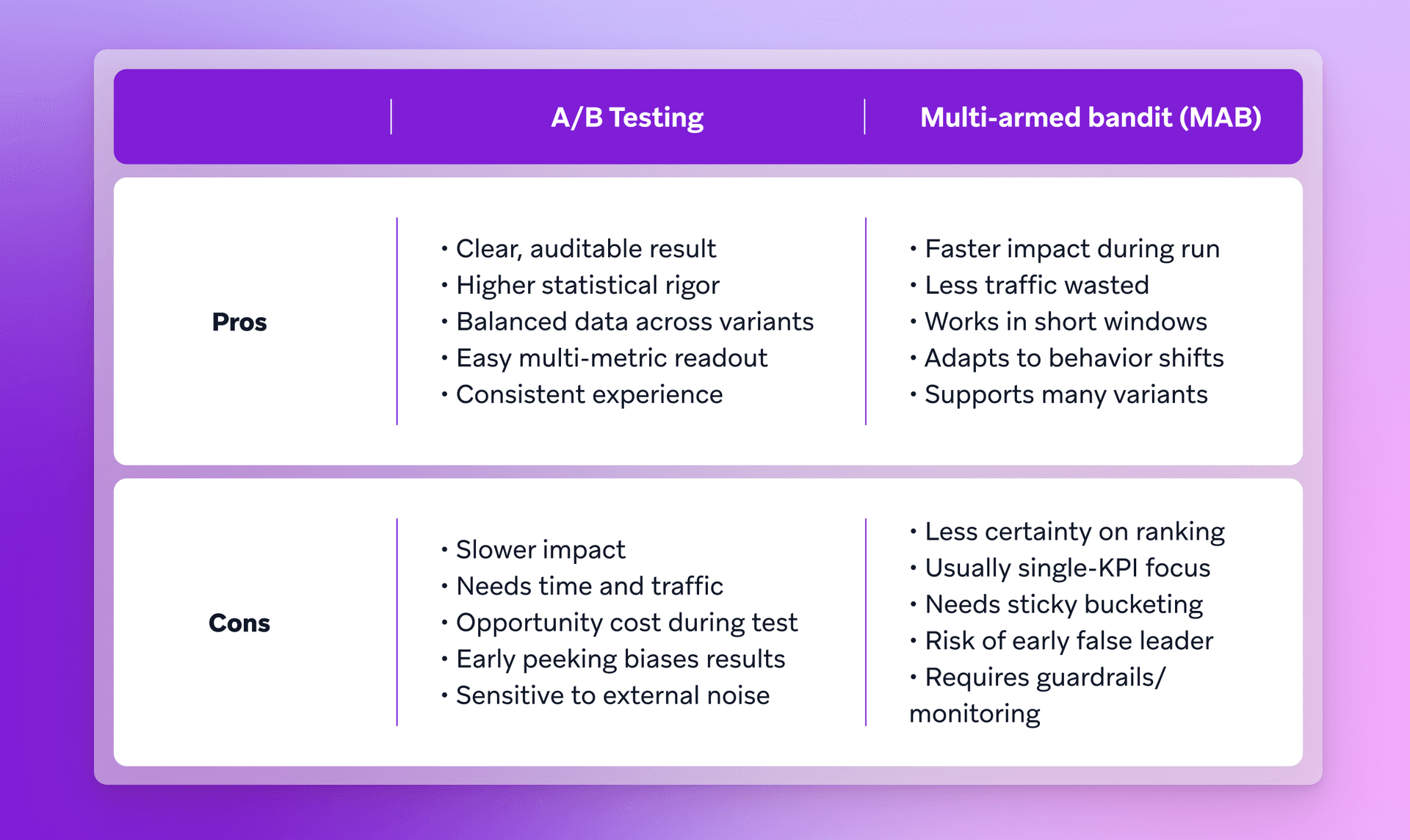

Key differences of A/B testing vs bandit testing

The main difference between A/B testing and multi-armed bandit testing is how quickly each one acts on results. A/B testing waits until enough data is collected to compare variants, which takes time and a steady split of traffic. Multi-armed bandit testing adapts while the test is running, moving more traffic to better performers based on real-time results.

Unlike traditional A/B tests, which usually compare one control against one variant (or one type of variant), bandit testing can handle multiple versions at once and optimize them dynamically.

Speed vs. certainty

Bandit testing moves during the test, sending more people to the option that’s winning now—great for time-sensitive optimization. A/B tests hold a steady split until the planned sample size, which gives you higher statistical rigor and cleaner, auditable decisions.

Exploration vs. exploitation

A/B testing separates the steps. Explore first (even split), then exploit later (roll out the winner). Bandit testing does both at once—most traffic goes to the current leader, while a small share keeps testing others. The timing is the trade-off. Wait too long and you miss short-term wins and slow long-term gains. Move too soon and you risk locking in a false leader.

What you learn vs. what you win

A/B tests keep exposure balanced, so you get a fuller read across variants and segments, making incremental lift easier to quantify and explain. Bandit testing cuts wasted traffic on weaker options, but collects less data on them, so the final ranking can be less certain.

Metrics and guardrails

Both methods can track multiple KPIs. A/B’s even split often makes it simpler to read a primary KPI alongside guardrails in one snapshot. Bandit testing typically optimizes a single objective during the run—use guardrails or a composite score if you need more than one outcome to matter.
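As a rough illustration of a composite score with a guardrail, the Python sketch below folds clicks, conversions, and unsubscribes into one reward value and separately flags a variant whose unsubscribe rate runs too high. The weights, the 0.5% cap, and the minimum-sends threshold are all hypothetical and would need to reflect your own business priorities.

```python
def composite_reward(clicked, converted, unsubscribed,
                     click_weight=0.2, conversion_weight=1.0, unsubscribe_penalty=2.0):
    """Collapse several outcomes for one send into a single number a bandit can optimize."""
    return (click_weight * clicked
            + conversion_weight * converted
            - unsubscribe_penalty * unsubscribed)


def breaches_guardrail(unsubscribes, sends, max_unsubscribe_rate=0.005, min_sends=500):
    """Flag a variant whose unsubscribe rate exceeds the cap once it has enough sends."""
    if sends < min_sends:
        return False  # too little data to judge
    return unsubscribes / sends > max_unsubscribe_rate
```

The design choice here is to keep the two concerns apart: the composite score steers allocation during the run, while the guardrail acts as a hard stop that no amount of clicks can offset.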

User experience

Bandit allocations shift mid-test, so add sticky bucketing to keep returning users on the same version. A/B tests naturally keep experiences consistent because the split doesn’t change during the run.
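A minimal sketch of sticky bucketing for a bandit, assuming you can store each user's first assignment: the Python example below uses an in-memory dictionary as a stand-in for a persistent store, and the class and method names are hypothetical. New users are assigned using the bandit's current weights; returning users get whatever they saw before, even if the weights have moved since.

```python
import random


class StickyAssigner:
    """Remember each user's first assignment so later weight changes don't move them."""

    def __init__(self, seed=None):
        self.rng = random.Random(seed)
        self.assignments = {}  # stand-in for a persistent store keyed by user ID

    def variant_for(self, user_id, current_weights):
        if user_id not in self.assignments:
            variants = list(current_weights)
            weights = list(current_weights.values())
            self.assignments[user_id] = self.rng.choices(variants, weights=weights)[0]
        return self.assignments[user_id]


assigner = StickyAssigner(seed=1)
first = assigner.variant_for("user-123", {"A": 0.5, "B": 0.5})
# The bandit has since shifted traffic, but the returning user keeps the same version.
later = assigner.variant_for("user-123", {"A": 0.1, "B": 0.9})
```

Here `first` and `later` are always the same variant, because the second call finds the stored assignment and never consults the new weights.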

Bandit testing shines when you have multiple variants, limited traffic, or a short window, and it can be a step toward personalization at scale. A/B testing is stronger for high-stakes changes and strategic calls where you want a definitive read before rolling out widely.

When to use A/B testing

Use A/B testing when you need a reliable answer before making a lasting change. It’s especially useful for validating big shifts—like a redesign, new pricing, or updated navigation—where a wrong move could be costly. A/B testing is also a good choice for clear, binary comparisons, such as two versions of an ad or subject line, and for situations where you want confidence that the change you’re measuring is what’s driving the result.

Gut check (yes/no):

- Will this change be live for weeks or months?

- Do you want a single, clean read that shows the primary KPI and guardrails together?

- Can you run for at least a full business cycle without major promos colliding?

- Do stakeholders want an auditable “why,” not just a short-term bump?

- Do you have the traffic and time to reach the planned sample size?

Helpful set-up tips:

- Write a one-line hypothesis and commit to a stop rule (date or sample size)

- Keep a small post-rollout holdout (1-5%) to track incremental lift over time

- Use a single bucketing key (e.g., user ID) so exposure stays consistent across devices and channels

- Avoid overlapping tests aimed at the same users during the same window
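Two of the tips above, a single bucketing key and a small post-rollout holdout, can be sketched with a hash of the user ID. The Python example below is one possible approach under that assumption; the function names, the salt format, and the 5% holdout share are illustrative. Hashing the same user ID always produces the same bucket, on any device or channel, without storing anything.

```python
import hashlib


def stable_point(user_id, salt):
    """Map a user ID to a stable number in [0, 1) that differs per salt."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def ab_bucket(user_id, experiment):
    """Fixed 50/50 split keyed on user ID, consistent across devices and channels."""
    return "control" if stable_point(user_id, experiment) < 0.5 else "variant"


def in_holdout(user_id, rollout, holdout_share=0.05):
    """True for the small share of users who keep the old experience after rollout."""
    return stable_point(user_id, f"{rollout}:holdout") < holdout_share


def incremental_lift(treated_conversions, treated_users, holdout_conversions, holdout_users):
    """Relative lift of the treated group's conversion rate over the holdout's."""
    treated_rate = treated_conversions / treated_users
    holdout_rate = holdout_conversions / holdout_users
    return (treated_rate - holdout_rate) / holdout_rate
```

Using a different salt per experiment keeps bucket membership in one test from lining up with membership in another, which helps when tests cannot be fully separated in time.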

Skip A/B if…

- The window is only a few days and you need results during the run

- You’re juggling many variants with thin traffic

- You must react to behavior that shifts hour by hour

When to use multi-armed bandit optimization

You should use multi-armed bandit testing when speed and adaptability matter most. Bandits reallocate traffic in real time, making them a strong fit for short campaigns or situations where fast learning drives impact. They balance the need to explore new options with the need to double down on what’s working, reducing the opportunity cost of waiting. And because they can handle multiple variants at once, bandits are well suited to continuous optimization without the need for constant monitoring.

Gut check (yes/no):

- Is the window short—days, not weeks?

- Does the primary KPI resolve quickly (e.g., CTR in minutes, CVR within a session/day)?

- Do you have several versions but not enough traffic for a clean even split?

- Will a single objective (or a composite score) work during the run, with simple guardrails on the rest?

- Do you expect behavior to drift across the window (early vs. late buyers, weekday vs. weekend)?

Helpful set-up tips:

- Keep a small share of traffic (e.g., 5-10%) always testing the other options so you don’t lock into a false early leader

- Use sticky bucketing so returning users keep seeing the same version across devices

- Add basic guardrails (unsubscribe or complaint rate, margin, support tickets) and simple stop conditions

- Do brief daily checks and keep a tiny always-on holdout or occasional fixed-split snapshot to estimate incremental lift

- Coordinate with other channels to avoid message collisions to the same audience
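The exploration share from the first tip can be expressed as a simple allocation rule, often called epsilon-greedy. In the Python sketch below, a fixed slice of traffic is spread evenly across every variant and the remainder goes to the current leader. The function name and the 10% default are assumptions for illustration.

```python
def allocation_with_floor(observed_rates, exploration_share=0.10):
    """Split traffic: an even exploration slice for every variant, the rest to the current leader."""
    leader = max(observed_rates, key=observed_rates.get)
    floor = exploration_share / len(observed_rates)
    weights = {name: floor for name in observed_rates}
    weights[leader] += 1 - exploration_share
    return weights
```

With three variants and the default share, each one keeps at least about 3.3% of traffic, so a variant that starts slowly still gathers enough data to overtake the leader later in the window.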

Skip bandit testing if…

- You need a precise ranking of every variant for long-term strategy

- The journey is compliance-sensitive and requires a fully audited read

- The goal is long-horizon (e.g., retention over months) without fast interim KPIs

Real-life examples for A/B testing and bandit algorithms marketing

Now let’s see how these concepts work in practice. In the following case studies, you’ll see where A/B testing gives you a confident read you can stand behind, and where multi-armed bandit testing (bandit algorithms applied to marketing) helps you adapt during a live campaign for faster gains.

Countdown to conversion—Dafiti’s in-app promos hit fast wins

Dafiti, Latin America’s leading fashion and lifestyle eCommerce brand, set out to lift conversions and revenue while simplifying cross-channel execution. By consolidating messaging in Braze and adding gamified in-app moments, the team moved performance in days, not weeks.

The problem

Dafiti’s weekly promotions ran on separate tools for push and email, which made cross-channel coordination slow and blunt. Because the channels were siloed, the team could not A/B test consistently across both or segment by behavior in a meaningful way. The goal was to improve conversion metrics and revenue across multiple markets without adding operational drag.

The solution

Dafiti brought email and in-app messaging together in Braze to run coordinated promotions and structured tests. The team A/B tested a countdown offer in an in-app message against a version without a timer, then introduced a scratch-and-win game to re-engage virtual window shoppers alongside a broader engaged audience. Behavior-based segments guided who saw what, keeping each message relevant while the team gathered clean reads on performance.

The results

- 300% increase in revenue per user

- 82% increase in orders

- 43% rise in conversion versus control

- Countdown in-app message reached a 45% conversion rate versus control

- Scratch-and-win in-app message delivered an 85% lift in conversions and a 300% lift in revenue

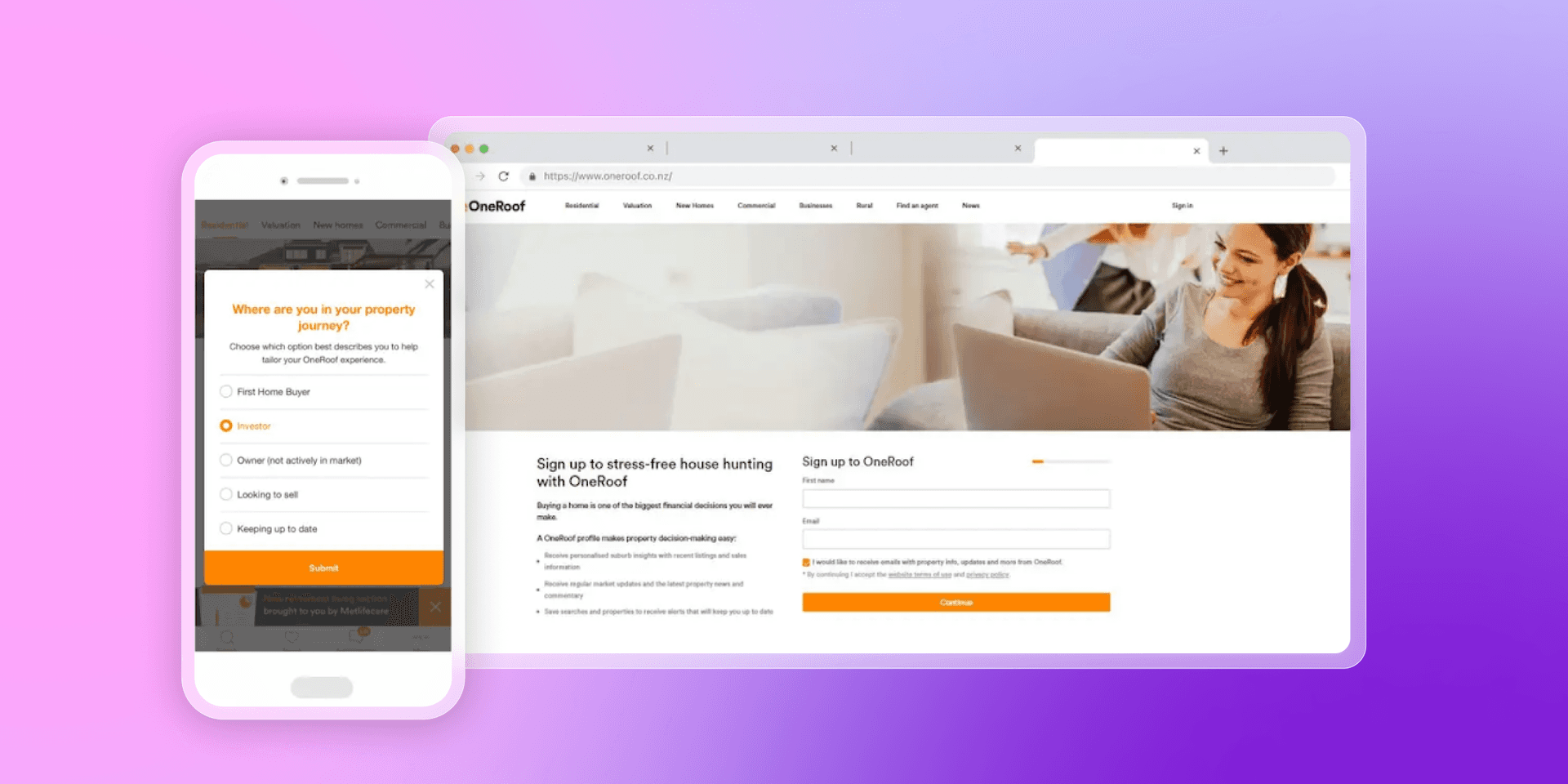

Timing wins—OneRoof lifts engagement with Intelligent Timing

OneRoof, New Zealand’s property destination, wanted faster, more relevant emails. By collecting simple preferences and using Braze Intelligent Timing to send when each person was most likely to engage, the team made email timing and content feel personal without extra engineering lift.

The problem

OneRoof’s marketers relied on developer-built customizations and API triggers to personalize, which slowed iteration. There were no live data integrations or custom attributes to tailor messages. Content was often generic and mismatched to a user’s location, and testing across audiences was hard to run and hard to learn from. The team needed a multi-channel setup that marketing could control, with timing and content that reflected real behavior and declared preferences.

The solution

OneRoof introduced a Profile Builder to capture intent, role, and preferred locations, then passed those attributes into Braze. They used Braze Intelligent Timing to pick send times based on each user’s past engagement and built localized newsletters and in-app messages using Connected Content and Liquid Personalization. Recommended Listings went out weekly with machine-learning–powered suggestions, and if a user hadn’t declared a suburb, inferred signals filled the gap. This approach aligns with a bandit-friendly pattern for send-time optimization, where traffic naturally shifts toward better-performing windows per user over time.

The results

- 23% increase in email click-to-open rate

- 77% Profile Builder completion rate, converting prospects to registered customers

- 218% uplift in total clicks to property listings

- 57% uplift in unique clicks

- One dynamic, localized newsletter matched the listing-page traffic of seven generic emails

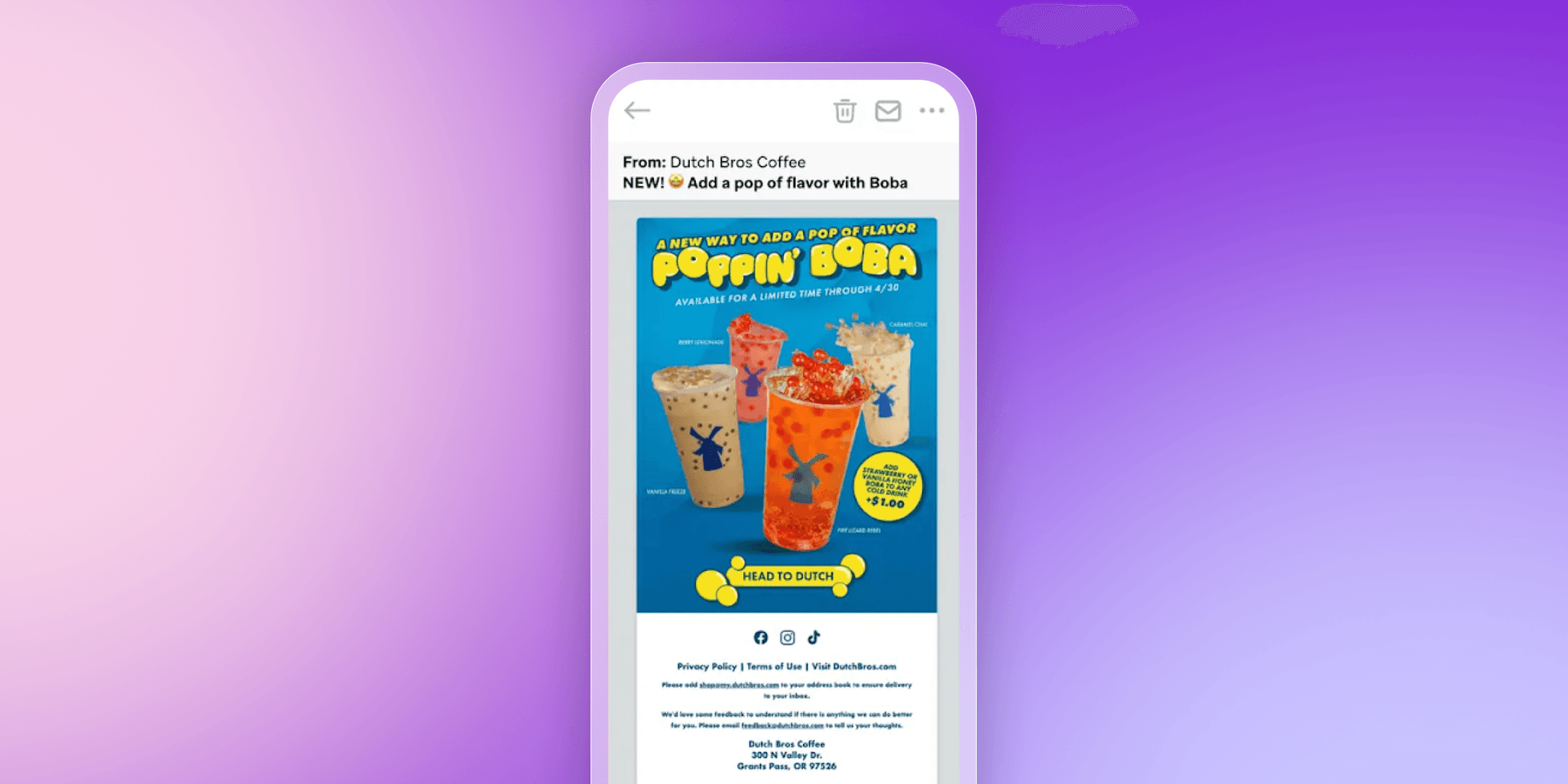

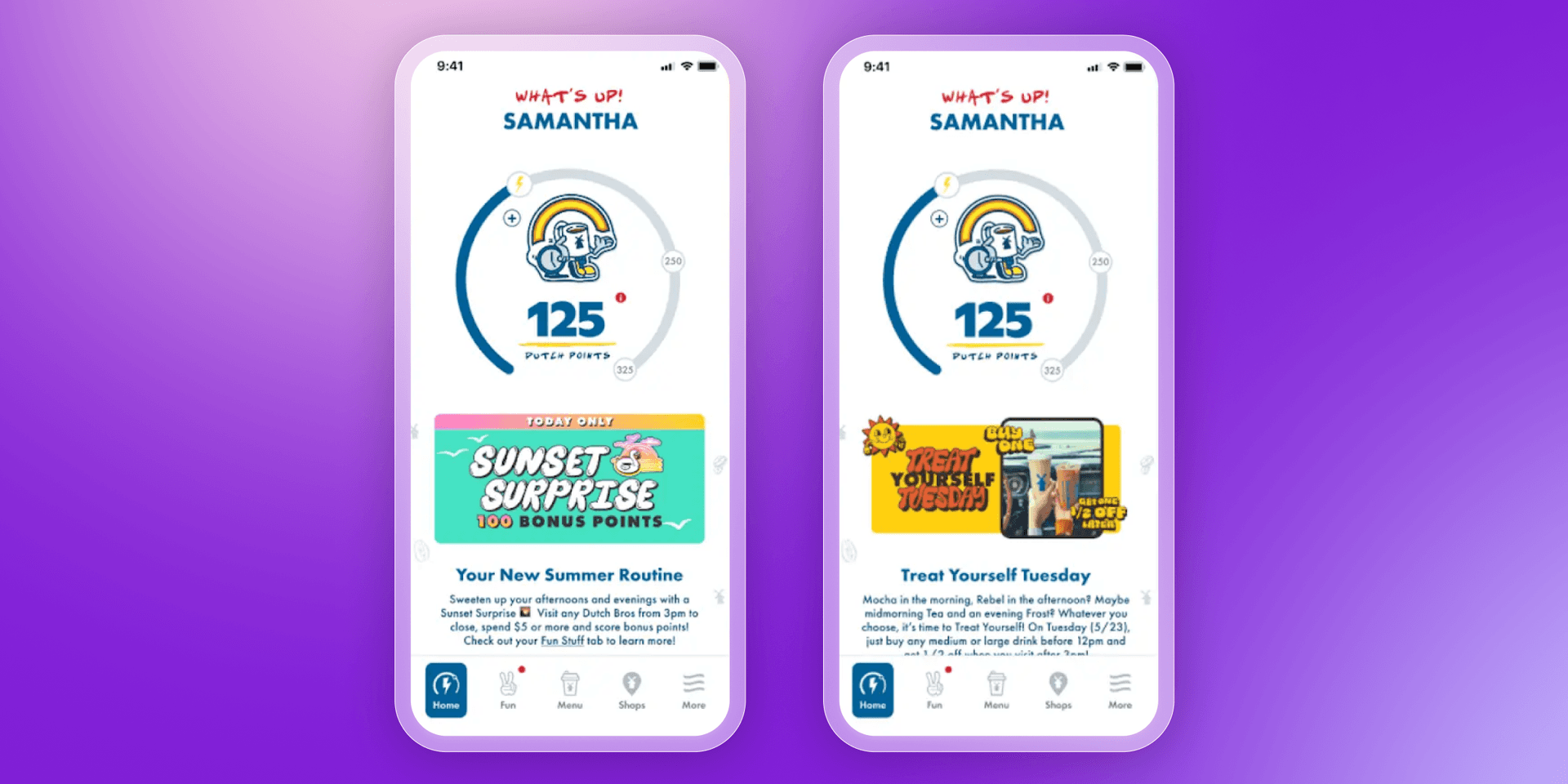

Preferences pour performance—Dutch Bros personalizes at scale

Dutch Bros is an Oregon-born beverage brand with more than 800 drive-thru locations across the United States. Known for a “relationship first” approach, the team wanted its app to feel as personal as a visit to the window. Dutch Bros turned to Braze to unify messaging across email, SMS, push, and in-app, and to deliver product-based personalization that speaks to each customer in real time.

The problem

Fragmented tools made consistent messaging hard and costly, and personalization depended on developer support. The team needed a single platform to run cross-channel campaigns from real-time data and iterate quickly.

The solution

Dutch Bros moved owned channels into Braze, using Canvas for journeys and Catalogs for location-aware products. With data flowing from Segment, they built product-specific messages tailored to preferences and recent behavior across channels, and operated campaigns more efficiently with TAM and Support guidance.

The results

- 230% increase in ROI from CRM campaigns

- 31% cost savings from platform consolidation

- Higher engagement and revenue from personalized, easier-to-manage campaigns

Dynamic experimentation: hybrid approaches that scale

Marketers don’t always need to choose between A/B testing and multi-armed bandit optimization. In practice, many brands combine the two, starting with structured tests to validate big ideas and then layering in adaptive approaches to maximize performance over time.

A common pattern looks like this:

- Validate with A/B testing. Use controlled experiments to test a major change—like a redesigned onboarding flow or new product messaging—so you have high confidence in the direction.

- Scale with adaptive testing. Once you know the winner, switch to a bandit-style setup that keeps optimizing in real time as conditions shift.

- Advance to reinforcement learning. With tools like BrazeAI Decisioning Studio™, testing moves beyond groups and applies at the individual level, continuously adapting to each customer’s behavior and context.

This hybrid model balances rigor with speed. You get the defensibility of statistically rigorous A/B testing, while also taking advantage of dynamic experimentation that captures short-term wins and adapts as customer behavior evolves.

How Braze and BrazeAI Decisioning Studio™ enable testing

Marketers don’t have to choose between the rigor of A/B testing and the adaptability of bandit testing. With Braze, you can run both approaches in one platform—and even take the next step with reinforcement learning.

Braze Canvas makes it easy to design clean A/B or multivariate tests. You can define holdout groups, set primary KPIs, and see results in clear dashboards. That gives teams confidence when validating major changes or isolating specific variables.

BrazeAI Decisioning Studio™ builds on this by applying reinforcement learning. Instead of testing once and stopping, the system runs continuous, adaptive experiments at the individual level. It reallocates messaging in real time, balancing exploration and exploitation automatically, to give personalization at scale that adapts as customer behavior shifts.

To keep tests meaningful and safe, Braze provides built-in guardrails—frequency caps, sticky bucketing for consistent user experience, and preview/approval tools before launch. Observability features also help teams measure incremental lift across KPIs like CTR, CVR, and LTV.

Together, these capabilities mean marketers can validate with confidence, adapt with speed, and continuously learn—all in one place.

Final thoughts on multi-armed bandit vs. A/B testing

Both A/B testing and multi-armed bandit optimization have a role in modern marketing. A/B testing delivers clarity and statistical rigor—ideal when you need a high-confidence answer before scaling a change. Multi-armed bandit testing trades some certainty for speed, dynamically pushing traffic toward what’s working in the moment.

With Braze, you don’t need to choose one over the other. You just need to know which method fits your goal, timeline, and level of risk tolerance. You can test big ideas with A/B, optimize campaigns dynamically with multi-armed bandit testing, and evolve toward continuous reinforcement learning that adapts at the individual level.

See how Braze helps you combine A/B testing and multi-armed bandit testing into one adaptive framework that drives real growth.

FAQs on multi-armed bandit vs. A/B testing

What is the difference between multi-armed bandit and A/B testing?

The difference between multi-armed bandit testing and A/B testing is how traffic is allocated. A/B testing keeps an even split until the test ends, while multi-armed bandit testing shifts traffic toward the best performer as results come in.

When should I use multi-armed bandit testing vs. A/B testing?

You should use multi-armed bandit testing when you need fast optimization, like during short campaigns. You should use A/B testing when you want high-confidence results before making a big change.

What are the pros and cons of multi-armed bandit testing in marketing?

The pros of multi-armed bandit testing in marketing are faster learning, reduced wasted traffic, and better short-term outcomes. The cons are less certainty for weaker options and a heavier focus on one KPI.

How can marketers balance rigor with speed in experimentation?

Marketers can balance rigor with speed in experimentation by using both methods. A/B testing provides confidence for big decisions, while multi-armed bandit testing keeps campaigns adapting in real time.

Is multi-armed bandit testing the same as reinforcement learning?

Multi-armed bandit testing is not the same as reinforcement learning, though the two are related: a bandit is one of the simplest reinforcement learning setups. Bandit testing focuses on reallocating traffic between options, while the reinforcement learning described here goes further and adapts decisions at the individual level.

How do Braze and BrazeAI Decisioning Studio™ combine A/B and MAB methods?

Braze and BrazeAI Decisioning Studio™ combine A/B and MAB methods by offering both approaches. Braze supports clear A/B and multivariate tests, while BrazeAI Decisioning Studio™ applies reinforcement learning for continuous optimization.
