The Mobile Marketer’s Monthly To-Do List

Published on July 25, 2016 / Last edited on July 25, 2016 / 6 min read

Team Braze

Digital marketers have a lot on their plate. When the overarching goal is “keep customers, make money,” the answer often becomes churning out campaigns at a breakneck pace, looking for whatever works best, and then homing in on it with single-minded focus.

You may be after newsletter signups, in-app purchases, push opt-ins, or getting users to share your product or service in their social space—or after all of that at once, and more. Some campaigns you’ll run for upwards of six months. Others might run for just a few days. Either way, it’s your responsibility to plan and manage them all, give each its due attention, and keep your eye on what’s next.

It can be a lot to manage. In fact, it can be the very definition of that office-y truism: lots of moving pieces.

But when does the mad dash end? When do you look at your incoming results and decide where to go next? If the answer to that for you is “unfortunately, not often,” this post is for you. Here we’re taking a strategic breather. We’ll cover which considerations are the most valuable to look at each month and how to boil down the task of running multiple campaigns at once.

Take a look, bookmark this page for later, and make a date to come back 30 days from now.

Engagement questions to ask every month

1. How is your onboarding process performing?

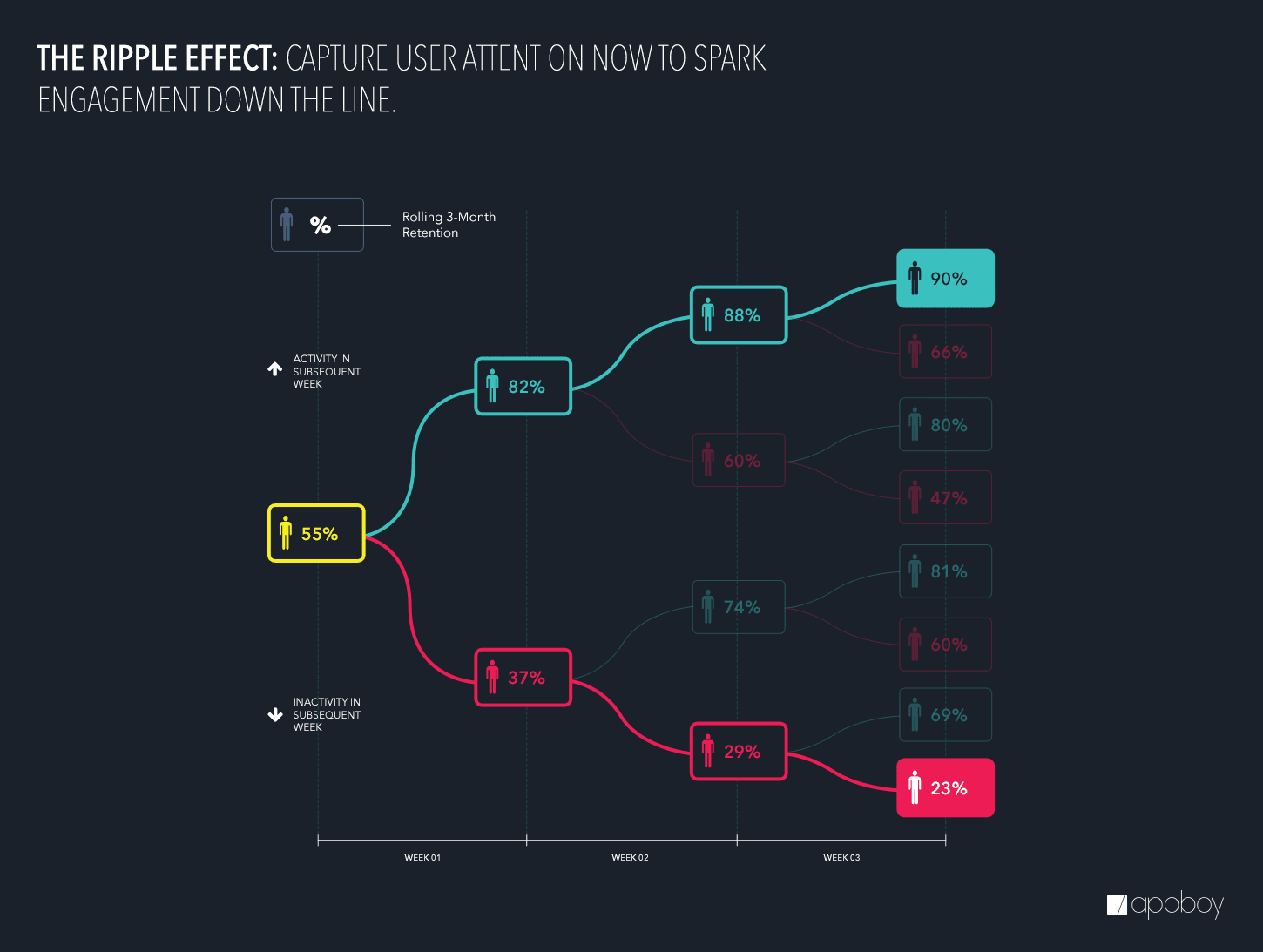

When it comes to apps, customers who engage once a week in the first four weeks after download have a 90% retention rate over three months. That’s a heavy-duty metric, and it means your onboarding process (whether for your site, email newsletter, or whatever else) needs to be on point. Check in on your performance metrics here. Are users getting stuck at a certain spot? Is one channel of outreach (email vs. push, say) getting more engagement? Pay a visit to our onboarding checklist for ideas on how to optimize this crucial campaign.
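If you want to track that “engaged once a week for the first four weeks” group yourself, here is a minimal Python sketch. It assumes “once a week” means at least one session in each of the first four seven-day windows after download (day 0 is the download day), and that you can export each user’s download date plus a list of session dates. The function names and data shape are hypothetical, not part of any Braze API.

```python
from datetime import date, timedelta


def engaged_weekly(download_day, session_days, weeks=4):
    """Return True if the user had at least one session in each of the
    first `weeks` seven-day windows after download (day 0 = download day)."""
    active_weeks = set()
    for d in session_days:
        offset = (d - download_day).days
        # Only sessions inside the onboarding window count.
        if 0 <= offset < weeks * 7:
            active_weeks.add(offset // 7)  # 0 = week 1, 1 = week 2, ...
    return len(active_weeks) == weeks


def weekly_engaged_share(users, weeks=4):
    """Fraction of users, given as (download_day, session_days) pairs,
    who were active in every one of their first `weeks` weeks."""
    if not users:
        return 0.0
    hits = sum(engaged_weekly(dl, sessions, weeks) for dl, sessions in users)
    return hits / len(users)


if __name__ == "__main__":
    dl = date(2016, 7, 1)
    users = [
        # Sessions on days 0, 8, 15, 22: active in weeks 1-4.
        (dl, [dl + timedelta(days=k) for k in (0, 8, 15, 22)]),
        # Sessions on days 0, 1, 15, 22: skipped week 2.
        (dl, [dl + timedelta(days=k) for k in (0, 1, 15, 22)]),
    ]
    print(weekly_engaged_share(users))  # 0.5
```

Comparing this share month over month, or across acquisition channels, is one way to spot where new users start to drop off.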

2. Are your engagement goals the same as last month?

Take a moment to reevaluate what engagement means in terms of your larger business goals. What are your current campaigns optimized for, and does that still make sense for your company? If not, what should be changed? Can you adjust your approach to better point toward your goals?

3. Are you driving the behavior you hope to drive?

You started each campaign with a specific user behavior outcome in mind. You had a map to your goal, and you wanted to usher your customers through a certain trajectory. Are your customers playing along? Or are they doing something else, going in their own direction? Is there value in where they’ve ended up? Can you adjust the campaign to capture them where they are now?

4. Do you want to stick with your original channel choices?

You began each campaign with certain channels in mind. Are they still working well? Is there perhaps an opportunity to drop one channel, or bring in another, to streamline your campaign or give it a boost?

Content questions to ask every month

1. What’s coming up in the calendar?

Consider what’s happening in the upcoming month that could drive more engagement with your audience. Messages tend to perform better when they’re sent in context. Calendar events offer plenty of opportunities to capitalize on holidays and seasonal trends with messaging that’s more relevant to your users. Plan ahead, though: scrambling at the last minute is never fun.

2. Is your brand voice consistent across all campaigns and all channels?

Different campaigns will call for different tones, but overall, there should be a consistent and solid through-line that keeps your brand voice recognizable. Is your voice feeling unified across all your platforms? One way to test this is to sign up for all of your outreach and then be a customer for a couple of weeks. You may notice copy or messages that need attention.

Metrics questions to ask every month

1. Are your segmenting options as powerful as you want them to be?

Are your users sharing the information you need them to share to segment them effectively? If not, how can you ask for their information, and encourage them to actually share it? Think about the value that the customer gets in sharing their data, and how you can convey that to them at the opportune time.

2. What have you learned from your multivariate campaigns?

You don’t want to run the same tests again and again. That’s a waste of resources, and yields diminishing returns. So, when you have your results, gather your key takeaways and move on. Check in on your results each month and plan your next steps with these findings in mind.

3. What are the trends you’re seeing in MAUs and DAUs?

Day by day, MAUs and DAUs may fluctuate greatly, and patterns can be hard to see. But month over month, you’ll be better able to spot usage trends and see if your engagement rates are growing or shrinking over the long term. If engagement is slipping, you can build out retention campaigns that encourage customers to come back to your app or site.

DAU = number of individual users who open your app in a day

MAU = number of individual users who used your app in the last 30 days (or, alternatively, in a given calendar month)

Example: If your app has been used 30,000 times by 15,000 people in the last 30 days, your MAU is 15,000.

4. Can you build or update a monthly report for your team and company?

A regular report gives you the opportunity to highlight your team’s work in front of the larger company. It gives your team, and your colleagues in other departments, the chance to ask questions and make requests. It can also serve as an invitation of sorts, to encourage your colleagues to share their knowledge base with you in ways that might dovetail with your findings. Plus, it’ll help build relationships internally.

What to put in your report:

- Tout the marketing team’s accomplishments in the past month.

- Orient everyone around what should happen next.

- Pull examples of campaigns and messages that performed well.

- Compare those to the equally useful information about what didn’t work as well.

- Give examples of where users got stuck, where they engaged, and where those metrics have changed since the prior month.

- Share useful data with other teams. For example, the product team might be interested to see shifts in device and platform data.

When to end a campaign

It’s easy to know when a one-off campaign has ended. Ongoing campaigns, on the other hand, should typically end when it becomes clear that one variant in a test is outperforming the others. In this case, the winner sticks around, the losing variants fall by the wayside, and some of your bandwidth is freed up to run tests and plan new initiatives elsewhere. A campaign might also end when, despite testing, no statistically significant difference is revealed between your test and its control group. In that case, it might be time to test new variants, or to focus on other pain points entirely.
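Deciding whether a variant is “clearly outperforming” or shows “no statistical difference” usually comes down to a significance test. One common choice for conversion-style outcomes is a two-proportion z-test. The Python sketch below assumes each user either converts or doesn’t, uses a 0.05 significance threshold, and assumes sample sizes large enough for the normal approximation to hold. The function names are hypothetical, and this is not necessarily how any particular platform computes significance.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between the
    conversion rates conv_a/n_a and conv_b/n_b, using a pooled variance."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Everyone (or no one) converted in both groups: nothing to compare.
        return 0.0, 1.0
    z = (p_a - p_b) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def campaign_verdict(conv_variant, n_variant, conv_control, n_control, alpha=0.05):
    """Summarize a variant-vs-control test at significance level `alpha`."""
    z, p = two_proportion_z_test(conv_variant, n_variant, conv_control, n_control)
    if p >= alpha:
        return "no significant difference"
    return "variant wins" if z > 0 else "control wins"


if __name__ == "__main__":
    print(campaign_verdict(120, 1000, 80, 1000))   # variant wins
    print(campaign_verdict(105, 1000, 100, 1000))  # no significant difference
```

In the first case (12% vs. 8% conversion), the variant is the clear winner and the campaign can wrap up. In the second (10.5% vs. 10%), the gap is too small to distinguish from noise at this sample size, which is the signal to try new variants or move on.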
